Notes on Effective Altruism

Effective altruism is a philosophy and social movement that encourages people to dedicate their time and money to the most effective causes in the world.

There are many ways to get involved with effective altruism. The easiest way to start is by reading about effective altruism and attending local events.

For people new to effective altruism, the following reads are worth checking out:

- effectivealtruism.org - start here

- 80000hours.org - or here

- openphilanthropy.org/research

- givewell.org

- The Most Important Century by Holden Karnofsky

- forum.effectivealtruism.org

- Why your career is your biggest opportunity to make a difference to the world

- EA project ideas the Future Fund was interested in funding

- Concave and Convex Altruism by Fin Moorhouse

- Research I’d Like to See by Fin Moorhouse

- Quadratic Funding x Effective Altruism by Gitcoin’s Kevin Owocki

- EA visual data overview

→ Online course to learn the core ideas of Effective Altruism

Values

The values that unite effective altruism are:

- Prioritization: We should try to use numbers to weigh how much different actions help in order to find the best ways to help, rather than just working to make any difference at all.

- Impartial altruism: We should aim to give everyone’s interests equal weight, no matter where or when they live, when trying to do as much good as possible.

- Open truthseeking: We should be constantly open and curious for new evidence and arguments, and be ready to change our views quite radically, rather than starting with a commitment to a certain cause, community or approach.

- Collaborative spirit: It’s more effective to work together than alone, and to do so we must uphold standards of honesty and friendliness. Effective altruism is about being a good citizen and working towards a better world, not achieving a goal at any cost.

Effective altruism is about finding the best ways to help others, and these values guide that search.

Philosophical underpinnings of Effective Altruism

Longtermism

Longtermism is the view that we should be doing much more to protect future generations. This is because our actions may predictably influence how well this long-term future goes. Longtermism encourages consideration of the long-term future and potential impacts of present actions on future generations.

- future people matter just as much as those who have lived in the past

- the future could be vast, and there are many people who have not yet been born

- our actions can influence the future in a positive or negative way.

Longtermism is a family of views that share a recognition of the importance of safeguarding and improving humanity’s long-term prospects. Researchers, advocates, entrepreneurs, and policymakers who are guided by the longtermist perspective are beginning to think seriously about what it implies and how to put it into practice. Read more about longtermism.

Consequentialism

Consequentialism is a moral philosophy that asserts the ethical value of an action is determined solely by its outcome or consequences. In other words, the ends justify the means. If the consequences of an action result in a positive outcome, then the action is considered morally right. Conversely, if the action leads to a negative outcome, it’s considered morally wrong. The best action, according to consequentialism, is the one that produces the most good or least harm for the greatest number of people.

Deontology

While EA’s primary focus is on consequences, a deontological perspective adds an emphasis on duties and principles that must be adhered to, even if they may not maximize overall well-being.

Virtue Ethics

EA can also align with virtue ethics, which stresses the cultivation of moral character and virtues such as compassion, justice, and empathy, which can motivate and sustain altruistic actions.

Rights-Based Ethics

Even as EA focuses on maximizing well-being, it can also incorporate rights-based ethical considerations, respecting individual rights and autonomy in the pursuit of altruistic actions.

Egalitarianism

EA’s consequentialist approach can be complemented by egalitarianism, focusing on equality, fairness, and distributive justice. This perspective emphasizes the importance of reducing systemic disparities and promoting equal opportunity.

Care Ethics

EA can be informed by care ethics, emphasizing the moral significance of personal relationships, empathy, and care for others. This perspective can serve to highlight the emotional aspects of ethical decision-making and ensure a balance between broad, impartial concern and specific, personal duties.

Neglectedness

This is a principle often used in EA thinking, suggesting that efforts should focus on causes and issues that are neglected but have high potential for impact. This approach aims to find areas where altruistic efforts can have the most significant marginal impact.

Sufficientarianism

Sufficientarianism posits that everyone should have enough - a level of well-being considered “sufficient.” Within EA, this could guide efforts towards ensuring a minimum standard of well-being for all.

Pluralism

Ethical pluralism acknowledges that there are many different valid moral perspectives and that no single moral theory can capture all moral truths. This can encourage a more flexible and inclusive approach within EA, integrating insights from diverse moral philosophies.

Prioritarianism

Prioritarianism is the moral philosophy that prioritizes the well-being of those who are worse off. In terms of effective altruism, this means focusing resources and efforts on helping those who are most disadvantaged. This concept is often used in discussions about resource allocation and justice, and can be a significant guiding principle in EA actions.

Utilitarianism

Utilitarianism can be destructive as it is based on the faulty premise of reductionism. It is attractive because it seems simple, but it often leads to terrible outcomes because it doesn’t take into account the fact that different people have different preferences. A better approach would be to focus on what is good for the majority of people, or at least what is good for the most vulnerable and disenfranchised people.

I believe that it is better to have a diversity of values to optimize for instead of focusing too heavily on maximising single measures, such as Quality-Adjusted Life Years (QALYs). Worldview diversification seems to be one valuable approach effective altruism offers in this direction. Ideally, we take multiple preferences into account and enable pluralism of values.

→ What is the alternative to utilitarianism? by Alexey Guzey

- “If your goal is to maximize utility of conscious beings and there appear beings utility of which is easier to maximize than utility of humans, you’re going to switch sides.”

- “Utilitarianism is exploited by beings that can change their utility functions (this actually happens in real life with utilitarians when they encounter people with “strong preferences”).”

- “From my observations, taking utilitarianism seriously means giving up its foundational elegance and instead pursuing all kinds of wacky heuristics that make it actionable.”

How can you take action?

“There are many ways to take action, but some of the most common ways people try to apply effective altruism in their lives are by:

- Choosing careers that help tackle pressing problems, or by finding ways to use their existing skills to contribute to these problems, such as by using advice from 80,000 Hours.

- Donating to carefully chosen charities, such as by using research from GiveWell or Giving What We Can.

- Starting new organizations that help to tackle pressing problems.

- Helping to build communities tackling pressing problems.”

See a longer list of ways to take action for more ideas.

Our top 3 lessons on how not to waste your career on things that don’t change the world

As an intro to existential risks, I put together existentialrisk.info

Nanotech x-risks

- Very rarely discussed within existential risk, but plausibly worth investigating

“Two-sentence summary: Advanced nanotechnology might arrive in the next couple of decades (my wild guess: there’s a 1-2% chance in the absence of transformative AI) and could have very positive or very negative implications for existential risk. There has been relatively little high-quality thinking on how to make the arrival of advanced nanotechnology go well, and I think there should be more work in this area (very tentatively, I suggest we want 2-3 people spending at least 50% of their time on this by 3 years from now).”

Books

- 80,000 Hours: Find a fulfilling career that does good

- The Precipice by Toby Ord “makes the case that protecting humanity’s future is the central challenge of our time”

- Doing Good Better: How Effective Altruism Can Help You Help Others, Do Work that Matters, and Make Smarter Choices about Giving Back

- Effective Altruism: Philosophical Issues (Engaging Philosophy)

- What We Owe the Future by Will MacAskill

- The Alignment Problem: Machine Learning and Human Values by Brian Christian

Donating

To support a range of smaller initiatives in different cause areas such as existential risk reduction or effective altruism broadly, I can recommend the donation funds run by the effective altruism foundation with analysts evaluating the most impactful efforts to donate to, or their partner funds such as the climate fund.

A great and easy-to-use platform for donating is every.org. I can also recommend joining an EA conference and your local community to share and discuss ideas.

My case for donating to small, new efforts

I think the average donor has very little impact when they donate to big, established efforts, whether in traditional philanthropy, such as Greenpeace, or in effective altruism, such as the Against Malaria Foundation. I think the biggest impact comes from the equivalent of angel investing, but for funding novel philanthropic initiatives that could potentially be extremely impactful in relevant cause areas, but are underexplored and underfunded.

Reflecting on my own giving, donating to projects such as Ocean Cleanup, NewScience or Taimaka in the first few months of their existence was probably much more impactful than donating to big, established efforts. I would also recommend novel, potentially impactful initiatives to other donors and foundations for funding. Once a billionaire or big foundation is funding a project, it probably doesn’t require your donations anymore.

In the book Effective Altruism: Philosophical Issues (Engaging Philosophy), Mark Budolfson and Dean Spears make this case eloquently in their paper “The Hidden Zero Problem: Effective Altruism and Barriers to Marginal Impact”. I highly recommend reading the book.

I do think that efforts such as the EA funds or ACX Grants are a decent passive way to have a similar impact, as they support these small, novel projects.

Some EA improvement suggestions:

- too much emphasis on individual actions over systemic change

- not enough emphasis on public and policy engagement and change

- should strive to be more inclusive and diverse, and take more values and goals into account

- enable collaborative EA-adjacent movements to develop, with similarly strong community and epistemics, for example a movement focused on maximising meaning / happiness or technological and economic progress (e.g. progress studies)

- encourage people to come up with their own convictions about how to improve the world, to be open to challenging core beliefs within the community, and to think for themselves from first principles rather than follow blindly

Donation habit, patient philanthropy, impact angel investing and diminishing returns

My philosophy is to develop a regular habit of donating and to consider many different cause areas and efforts (and thus values), while focusing on growing my wealth to have the biggest long-term impact through my own projects, angel impact investing and long-term donations following patient philanthropy. I do believe, though, that there are diminishing impact returns: the first $1-10k for a new effort goes further than $1m to a big established one that is on the radar of big donors, so I try to support early, small, unproven projects or individuals as much as possible.
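To make the diminishing-returns intuition concrete, here is a minimal sketch under a strong assumption that is mine and not a measured fact: that an effort’s total impact grows logarithmically with its total funding. The function names, the `scale` parameter and the dollar figures are hypothetical and chosen only for illustration. The sketch compares what the same $10k donation adds to an unfunded project versus one that already has $10m.

```python
import math


def impact(funding: float, scale: float = 10_000.0) -> float:
    """Hypothetical total impact of an effort with the given funding.

    Assumes a logarithmic (concave) curve, so each extra dollar
    adds less than the one before it.
    """
    return math.log1p(funding / scale)


def marginal_impact(existing: float, donation: float, scale: float = 10_000.0) -> float:
    """Extra impact a donation adds on top of existing funding."""
    return impact(existing + donation, scale) - impact(existing, scale)


# Same $10k donation, two different starting points (illustrative numbers).
early = marginal_impact(existing=0, donation=10_000)
late = marginal_impact(existing=10_000_000, donation=10_000)

print(f"new, unfunded effort:      {early:.4f}")
print(f"established, $10m funded:  {late:.6f}")
print(f"ratio early / late:        {early / late:.0f}x")
```

Under this assumed curve, the same $10k produces several hundred times more modeled impact at the unfunded project than at the established one. A different curve would give a different ratio; only the direction of the comparison is the point.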

—

A list of organizations I find worth supporting:

Meta: Evaluation, Effective Altruism Movement

- EA Infrastructure Fund

- Founders Pledge

- 80,000 Hours

- Animal Charity Evaluators

- Generation Pledge

- Centre For Effective Altruism

- Effective Altruism Foundation

- Rethink Charity

- Rethink Priorities

- Legal Priorities Project

Long-Term: X-Risk, AI Risk, Nuclear Etc.

- EA Fund Long-Term Future

- Nuclear Threat Initiative

- Future Of Life Institute

- Center For Human-Compatible AI

- Berkeley Existential Risk Initiative

- Machine Intelligence Research Institute

- Centre For Health Security

- Founders Pledge Patient Philanthropy

Global Health And Development

- EA Fund Global Health And Dev

- Malaria Consortium

- Against Malaria Foundation

- GiveWell

- GiveWell Max Impact Fund

- StrongMinds

- Evidence Action

- Kickstart.org - donating irrigation tools to grow harvest for poorest farmers

Science, Tools

- Founders Pledge Science & Tech Fund

- New Science

- MAPS Mental Health

- Qualia Research

- Mars Society

- Wikipedia

- Our World In Data

- Impetus Grants: Fast Longevity Research Grants

Climate

- Spark Climate - Ryan’s top recommendation

- Founders Pledge Climate Change Fund

- Clean Air Task Force

- Terra Praxis

- Cool Earth

Great Videos

Existential Risk: Managing Extreme Technological Risk

Holden Karnofsky - Transformative AI & Most Important Century

Prospecting for gold | Owen Cotton-Barratt | EAGxOxford 2016

Great Videos About Specific Initiatives

The Nuclear Threat Initiative

Clean Air Task Force

—

- List of organizations I supported (with a range of small amounts)

Appendix: Valuable critiques and improvement suggestions

Notes on EA by Michael Nielsen

- EA’s emphasis on quantifiable outcomes can undervalue less measurable but significant contributions (e.g., the arts, personal fulfillment).

- Risk of creating a ‘misery trap’ for adherents, who may feel constant stress about not doing ‘enough’ good.

- EA’s utilitarian framework may oversimplify complex moral decisions, leading to potentially misleading or reductive conclusions.

- The movement’s strong emphasis on maximizing good might overlook the importance of individual well-being and personal choices.

Questioning EA’s Moral Utilitarianism:

- The utilitarian approach of EA, focusing on ‘maximizing good’, often ignores the pluralistic nature of moral values and ethical complexities.

- EA’s reliance on a single, overarching principle can be overly restrictive and may not accommodate diverse personal moral frameworks.

Issues with EA’s Homogeneity and Approach:

- Potential overalignment with existing power structures and technocratic capitalism, which might bias EA’s priorities and strategies.

- Concerns about EA’s adaptability and inclusiveness, given its focus on a specific set of values and methodologies.

- EA’s central principles can lead to stress and a sense of inadequacy among its followers. Nielsen proposes that EA might need to be part of a broader life philosophy rather than the sole guiding principle.

Nielsen acknowledges the meaningfulness and attraction of EA as a life philosophy but points out its limitations and the need for a more balanced approach to living a fulfilling life while contributing to the greater good. He calls for a more nuanced understanding of what constitutes ‘doing good’ and how it fits into a broader life philosophy.

Threads or articles

- Criticism of effective altruism

- Criticism and Red Teaming Contest

- Winners of the EA Criticism and Red Teaming Contest

- Effective altruism gave rise to Sam Bankman-Fried. Now it’s facing a moral reckoning.

- What we don’t owe the future: Longtermism is a fantasy

- A Systematic Response to Criticisms of Effective Altruism in the Wake of the FTX Scandal

Effective altruism in the garden of ends

- The author’s journey to EA led them to question the philosophy, eventually concluding that the movement needs to do a better job of supporting its members. They recount how they eventually realized that their sense of obligation to do the most good possible was coming from themselves, and that it was possible to put down the “psychological whip” of obligation and still have a desire to help. They also explore the idea that one can have multiple ends, and that it is possible to balance these ends without sacrificing any of them. The author is talking about the idea of a community that supports and helps grow each other’s “ends” (goals, projects, etc.), which includes but is not limited to effective altruism. This community would be based on mutual support and cooperation, rather than competition, and would work to find ways to minimize tradeoffs between different goals.

Tensions between moral anti-realism and effective altruism

Many effective altruists think that maximizing utility is the only objective good, but the author of this essay suggests that this contradicts their other belief that there is no such thing as objective moral truth. The author argues that these effective altruists are wrong about how human minds work, as humans are not likely to value all beings equally. The author suggests that, while effective altruists may have other intrinsic values, the only thing that they value as an end in and of itself is the utility of conscious beings.

- The Reluctant Prophet of Effective Altruism

- The Effective Altruism movement is not above conflicts of interest

- Meant to Keep Malaria Out, Mosquito Nets Are Used to Haul Fish In

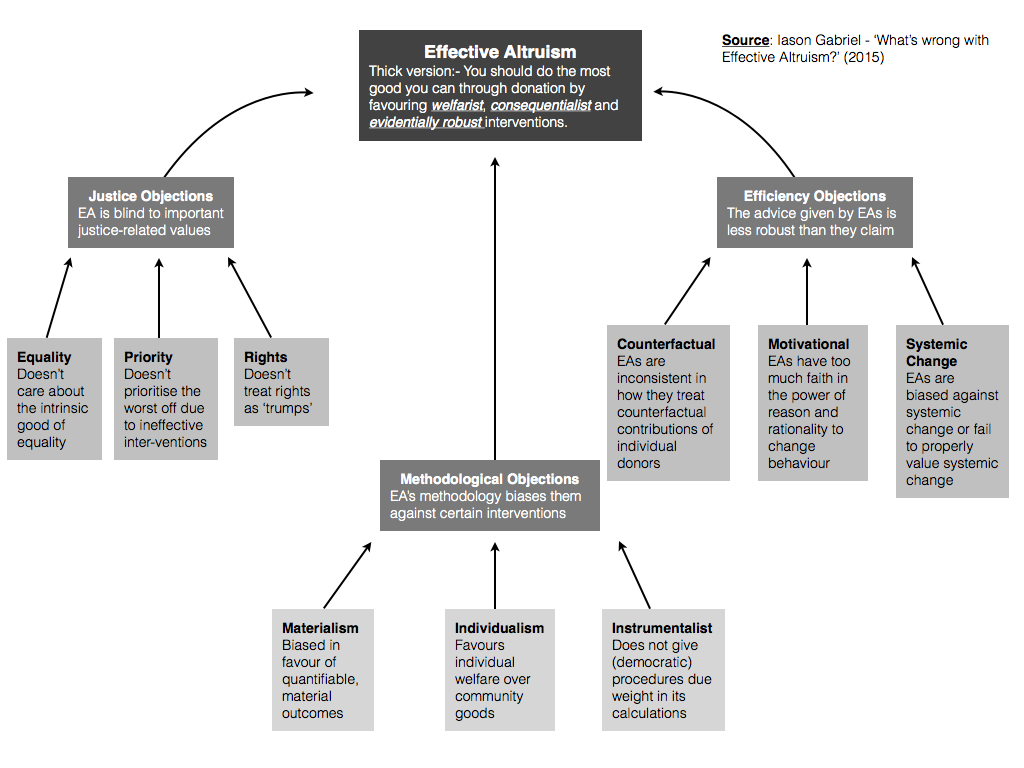

Effective Altruism: A Taxonomy of Objections

“I’ll go through each branch of this taxonomy in detail in future posts. For now, I’ll give a general overview, starting with the justice branch:

Justice Objections: The welfarist/consequentialist philosophy favoured by EAs renders the movement blind to important, justice-related values (i.e. values that affect how the benefits of charitable interventions get distributed and how they intersect with other moral concerns). There are three more specific forms of this objection:

- Equality: EA only values equality in instrumental terms, i.e. it is not sensitive to the intrinsic good of equal distributions of welfare.

- Priority: Although EAs purport to give priority to the poorest of the poor, they are unlikely to do this in practice. The desirability of a charitable intervention is, for EAs, always determined by its effectiveness (i.e. the marginal gain in welfare) and policies focusing on the worst off are unlikely to be the most effective according to this metric.

- Rights: EAs are consequentialists and so do not give adequate weight to individual rights in their moral assessments. They may view the protection of rights as a convenient heuristic for achieving welfare gains, but they are willing to override rights when the consequences justify this. In short, EAs do not treat rights as trumps.

This brings us to the methodological branch. This may be the one I find most interesting:

Methodological Objections: The tools EAs use to assess and evaluate charities end up biasing them in unfavourable directions. Three of these biases are apparent in the work of EAs:

- Materialism: EAs favour causes with tangible, quantifiable and easily measurable goals. This biases them away from causes with less tangible benefits. It may also bias them in favour of questionable, but evidentially tractable, metrics of evaluation (e.g. DALYs)

- Individualism: EAs favour causes with benefits for individuals, leading them to ignore or undervalue community-level goods. But these should not be ignored as they form an important part of the good.

- Instrumentalism: EAs are focused on achieving certain outcomes, not so much on the procedures that lead to those outcomes. This often leads them to favour technocratic as opposed to democratic interventions, on the grounds that the former are cleaner and more efficient than the latter. This ignores the value of democratic procedures and, possibly, contributes to bad governance in donee countries.

And then, finally, we have the efficiency branch, which is also pretty interesting to me as I find myself intuitively at odds with some of the advice given by EAs but unable to fully articulate my intuitive concerns:

Efficiency Objections: The advice given by prominent EA organisations is less robust and less efficient than they suppose. This is important because the EA movement has taken on the social responsibility of providing such advice to its adherents. Again, this objection comes in three main forms:

- Counterfactuals: EAs often rely on counterfactuals when giving advice. For example, their earning to give advice is premised on questions like ‘what difference would it make if I worked with a charity and helped people directly versus working in finance and donating the money I make?’. But they are inconsistent in their treatment of counterfactuals, sometimes neglecting similar questions like ‘what difference would it make if I donated to one of GiveWell’s top charities versus making another donation?’.

- Motivation/Psychology: EAs have great faith in the power of reason and rationality to change how people behave, but they may be wrong to do so when the movement is so young and the existing adherents so self-selecting. This may affect their ambitions of creating a truly mass social movement.

- Systemic Change: EAs are often quite reactionary in their advice: favouring neglected causes because of their marginal utility, but moving onto other interventions when a sufficient mass of people address the previously neglected cause. This leads them to ignore or misvalue the importance of systemic change in making the world a better place.”

- Effective Altruism: A Taxonomy of Objections

What comes after EA?

What's comes after EA?

— ellie (@ellie__hain) November 14, 2022

A movement that isn't driven by eliminating suffering, but celebrating life. A movement that lifts people into building a more beautiful world, rather than crushing them into depression with continuous gloom and doom.